As global energy trade accelerates its digital transformation, data has become a core factor of production in enterprise operations and decision-making. Z Corporation, a leading player in international oil trading, has built a vast business network spanning crude oil, refined products, natural gas, and chemicals. Its annual trade volume exceeds tens of millions of tons, its trade value runs to hundreds of billions of U.S. dollars, and it trades over one million barrels a day across all major global energy markets.

However, the scale of Z Corporation’s business has brought significant data processing challenges. The company processes approximately 20 million rows of data each day, and its legacy infrastructure suffered from low processing efficiency, high costs, and poor scalability. To address these challenges, Z Corporation deployed the X-Ray data governance and mining platform, which uses distributed storage and parallel computing to manage massive data volumes efficiently and respond in real time, substantially improving the company’s ability to turn data into business value.

I. Data Challenges Faced by Z Corporation

With ongoing business expansion, Z Corporation’s existing data system struggled to handle tens of millions of rows per day, exposing several critical issues:

1. Low Processing Efficiency

Traditional tools could not support large-scale data operations. Loading, querying, and organizing data were time-consuming, affecting employee productivity and business response times.

2. High Storage Costs

Rapid data growth forced constant capacity expansion on high-performance servers and cloud resources, driving costs out of control. Managing heterogeneous data added further difficulty.

3. Poor Scalability and Stability

As data volumes grew exponentially, the system hit bottlenecks during peak hours, undermining both stability and user experience.

4. Difficulties Ensuring Data Quality

Data from multiple sources was complex and frequently duplicated, erroneous, or incomplete, which reduced analytical accuracy and the reliability of decisions.

5. Insufficient Real-Time Processing Capabilities

In commodity trading, real-time data is essential. The original architecture struggled to support fast decision-making and timely risk control.

6. Low Data Utilization Rates

Due to lengthy data cleansing and organization processes, business users had little time for analysis, leading to underutilized data value.

To resolve these issues, Z Corporation urgently needed a platform capable of handling massive data, supporting high concurrency, offering scalability, and ensuring high availability. After evaluation, Z Corporation selected the X-Ray platform for its robust large-scale data processing capabilities. The following sections outline its core technical architecture and processing strengths.

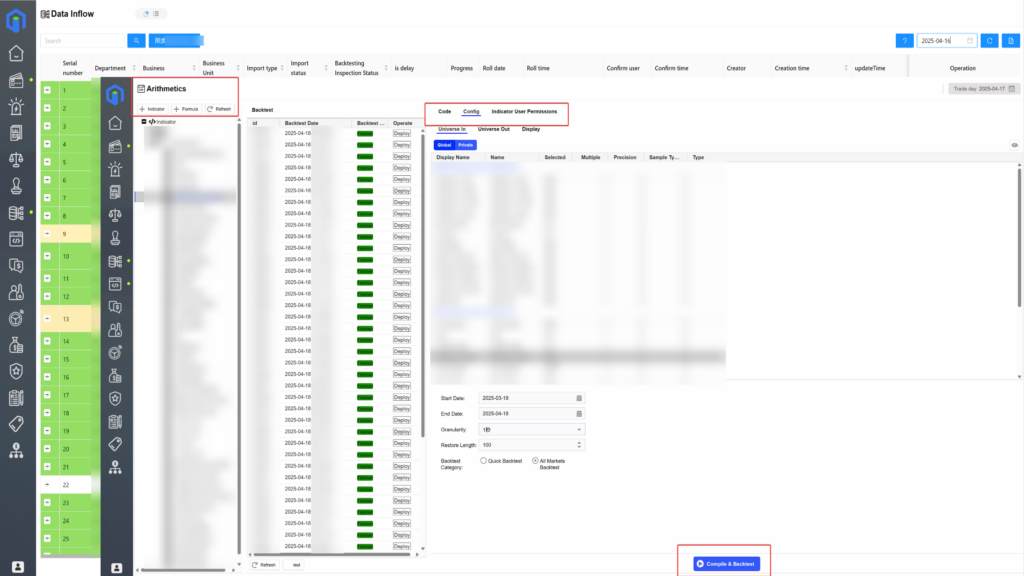

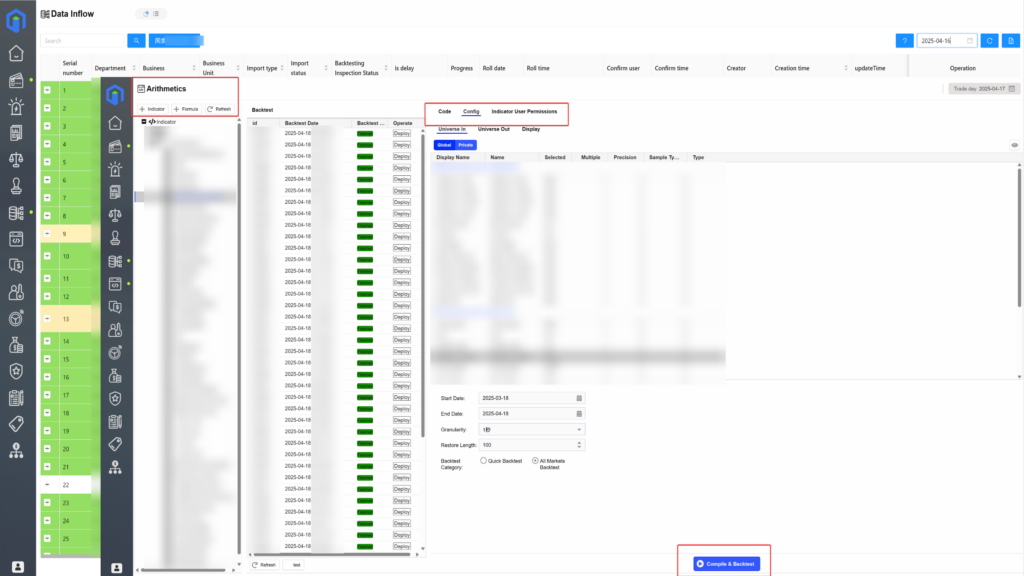

II. X-Ray’s Distributed Data Processing Solution

To resolve Z Corporation’s data processing challenges at the root, X-Ray built a distributed architecture that combines relational and non-relational databases into a comprehensive data processing solution. The design balances performance, reliability, and scalability while keeping long-term operating costs under control, giving the enterprise a stable, flexible, and efficient big data processing capability.

1. Hybrid Distributed Architecture with MySQL + NoSQL

At the infrastructure level, X-Ray integrates MySQL with its proprietary NoSQL distributed storage system, leveraging each for different data scenarios:

- MySQL handles structured data with high transactional integrity and strong consistency, suitable for business-critical systems requiring data accuracy.

- NoSQL supports unstructured data and high-concurrency read/write operations, offering horizontal scalability ideal for real-time queries and log analysis.

This hybrid setup accommodates Z Corporation’s coexisting structured and unstructured data, improving system adaptability and processing efficiency across diverse tasks.
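To make this division of labor concrete, here is a minimal Python sketch of record routing in a hybrid store. It is not X-Ray’s implementation: `sqlite3` stands in for MySQL, an in-memory dictionary of JSON strings stands in for the proprietary NoSQL store, and the `HybridStore` class, the `kind` field, and the trade columns are all hypothetical.

```python
import json
import sqlite3
from typing import Any


class HybridStore:
    """Toy hybrid store: sqlite3 stands in for MySQL, a dict of JSON for NoSQL."""

    def __init__(self) -> None:
        self.sql = sqlite3.connect(":memory:")
        self.sql.execute(
            "CREATE TABLE trades (trade_id TEXT PRIMARY KEY, product TEXT NOT NULL, "
            "volume_bbl REAL NOT NULL, price_usd REAL NOT NULL)"
        )
        self.docs: dict[str, str] = {}  # key -> serialized JSON document

    def write(self, key: str, record: dict[str, Any]) -> str:
        """Route by data shape: fixed-schema trades to SQL, everything else to documents."""
        if record.get("kind") == "trade":
            # The connection context manager commits on success and rolls back on error.
            with self.sql:
                self.sql.execute(
                    "INSERT INTO trades VALUES (?, ?, ?, ?)",
                    (key, record["product"], record["volume_bbl"], record["price_usd"]),
                )
            return "sql"
        self.docs[key] = json.dumps(record)  # schema-free: any JSON-serializable shape
        return "nosql"

    def total_volume(self, product: str) -> float:
        """Relational aggregate that relies on the fixed schema."""
        (total,) = self.sql.execute(
            "SELECT COALESCE(SUM(volume_bbl), 0) FROM trades WHERE product = ?",
            (product,),
        ).fetchone()
        return total


store = HybridStore()
store.write("T-001", {"kind": "trade", "product": "Brent", "volume_bbl": 500_000, "price_usd": 82.5})
store.write("LOG-1", {"kind": "feed_event", "msg": "quote feed reconnected", "tags": ["ICE"]})
```

The routing rule here is deliberately simple (a single `kind` flag); a real system would more likely route by source system or schema registry entry.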

| Comparison Dimension | MySQL (Relational DB) | NoSQL (Non-relational DB) |
|---|---|---|
| Data Model | Tables with a fixed schema | Flexible structures (key-value, document, column-family, graph) |
| Data Type Support | Structured data | Unstructured and semi-structured data (JSON, XML, binary) |
| Consistency Model | Strong consistency, ACID transactions | Often eventual consistency; under the CAP theorem, systems favor availability (AP) or consistency (CP) during network partitions |
| Query Language | Standard SQL | Database-specific query APIs (e.g., MongoDB query syntax) |
| Performance Traits | Strong single-node transactional performance | High-concurrency reads/writes across many nodes |
| Scalability | Primarily vertical scaling | Horizontal scaling with rapid node addition |
| Cost Structure | Typically relies on high-performance hardware | Deployable on commodity servers, with flexible costs |
| Typical Scenarios | Core trading, finance, inventory | Logs, recommendation engines, real-time analytics |

To further boost performance, X-Ray uses high-performance SSD/NVMe storage at the foundation layer, which accelerates data access and reduces latency under high-concurrency workloads. MySQL and NoSQL each handle the tasks they suit best, while fast storage underpins system-wide throughput. Together, architectural optimization and storage acceleration deliver a notable performance uplift.

2. Automatic Load Balancing and Elastic Scaling

X-Ray features an internal load-balancing mechanism that dynamically distributes data and computation tasks across multiple storage and processing nodes based on real-time loads, preventing bottlenecks.
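The article does not specify X-Ray’s balancing algorithm. As an assumption for illustration, the sketch below uses a common greedy policy: hand each task to the node with the smallest accumulated load, tracked in a min-heap. The `dispatch` function, task names, and costs are hypothetical.

```python
import heapq


def dispatch(tasks: list[tuple[str, int]], nodes: list[str]) -> dict[str, list[str]]:
    """Assign (task_id, cost) pairs so each task goes to the currently least-loaded node."""
    heap = [(0, name) for name in nodes]  # (accumulated load, node name)
    heapq.heapify(heap)
    plan: dict[str, list[str]] = {name: [] for name in nodes}
    # Greedy "largest task first" ordering tends to even out the final loads.
    for task_id, cost in sorted(tasks, key=lambda t: t[1], reverse=True):
        load, node = heapq.heappop(heap)
        plan[node].append(task_id)
        heapq.heappush(heap, (load + cost, node))
    return plan


plan = dispatch(
    [("load_ice", 5), ("load_nymex", 4), ("dedupe", 3), ("index", 3)],
    ["node-1", "node-2"],
)
```

A production balancer would use live load metrics rather than static cost estimates, but the "pick the least-loaded node" decision is the same.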

The platform also supports horizontal elasticity. When traffic surges or nodes fail, the system automatically allocates resources and adds nodes as needed, keeping services running and highly available as Z Corporation’s business scales.
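Elastic scaling is often driven by a simple target-utilization rule. The function below is a hypothetical example of such a rule, not X-Ray’s documented policy; the 70% target and the node limits are assumed values. Because a failed node no longer counts as healthy, the same calculation also requests replacement capacity after a failure.

```python
import math


def scaling_delta(load: float, capacity_per_node: float, healthy_nodes: int,
                  target_util: float = 0.7, min_nodes: int = 2, max_nodes: int = 64) -> int:
    """Nodes to add (positive) or remove (negative) to keep utilization near the target.

    All thresholds are illustrative assumptions.
    """
    needed = math.ceil(load / (capacity_per_node * target_util))
    desired = max(min_nodes, min(max_nodes, needed))  # clamp to the allowed cluster size
    return desired - healthy_nodes


# Traffic surge: 6,500 rows/s on 8 healthy nodes rated at 1,000 rows/s each.
surge = scaling_delta(6500, 1000, healthy_nodes=8)
```

Real autoscalers usually add a cooldown period so the cluster does not oscillate between scale-out and scale-in.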

3. Efficient Compression Algorithms Improve Storage Utilization

To control costs and improve storage efficiency, X-Ray applies high-performance compression algorithms, converting incoming data into compressed binary formats before storage. This significantly reduces space usage while keeping read performance high, helping Z Corporation absorb its growing data volumes.
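The specific compression algorithm and binary format are not named in the source. As a stand-in, this sketch serializes records compactly as JSON and compresses them with DEFLATE via Python’s standard `zlib` module; `pack` and `unpack` are hypothetical helper names and the sample rows are synthetic.

```python
import json
import zlib


def pack(records: list[dict]) -> bytes:
    """Serialize records compactly, then DEFLATE-compress them into a binary blob."""
    raw = json.dumps(records, separators=(",", ":")).encode("utf-8")
    return zlib.compress(raw, level=6)


def unpack(blob: bytes) -> list[dict]:
    """Inverse of pack(): decompress, then parse."""
    return json.loads(zlib.decompress(blob).decode("utf-8"))


rows = [{"product": "Brent", "side": "BUY", "volume_bbl": 1000 + i % 50, "venue": "ICE"}
        for i in range(10_000)]
raw_size = len(json.dumps(rows, separators=(",", ":")).encode("utf-8"))
blob = pack(rows)
print(f"{raw_size} bytes -> {len(blob)} bytes ({raw_size / len(blob):.1f}x smaller)")
```

Repetitive trade records compress well; a columnar binary encoding would typically compress better still, because values of the same field sit next to each other.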

4. Parallel Computing and MapReduce for Massive Data Workloads

At the processing layer, X-Ray utilizes parallel computing and the MapReduce model to enhance handling of massive data workloads. The engine breaks tasks into subtasks and executes them in parallel across multiple processor cores or distributed nodes.
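The map, shuffle, and reduce steps can be sketched in a few lines. This is a toy illustration, not X-Ray’s engine: it totals traded volume per product, and the field names are hypothetical. CPython threads do not speed up CPU-bound Python code because of the global interpreter lock, so a real deployment would spread the map phase across processes or cluster nodes; the structure stays the same.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def map_chunk(chunk: list[dict]) -> list[tuple[str, float]]:
    """Map: emit (product, volume) pairs for one chunk of trade rows."""
    return [(row["product"], row["volume_bbl"]) for row in chunk]


def reduce_values(values: list[float]) -> float:
    """Reduce: combine all values emitted for one key."""
    return sum(values)


def map_reduce(rows: list[dict], n_workers: int = 4) -> dict[str, float]:
    size = max(1, -(-len(rows) // n_workers))  # ceiling division
    chunks = [rows[i:i + size] for i in range(0, len(rows), size)]
    # Map phase: chunks are processed concurrently by a worker pool.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        mapped = list(pool.map(map_chunk, chunks))
    # Shuffle phase: group intermediate values by key.
    groups: dict[str, list[float]] = defaultdict(list)
    for pairs in mapped:
        for key, value in pairs:
            groups[key].append(value)
    # Reduce phase: one aggregate per key.
    return {key: reduce_values(values) for key, values in groups.items()}
```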

X-Ray also uses data partitioning strategies based on business logic, enabling localized task scheduling and concurrent processing. This improves resource efficiency and system responsiveness under high-concurrency, multi-scenario conditions.
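One common way to partition by business logic is to hash a business key so that related rows always land on the same partition and can be processed locally. The sketch assumes, purely for illustration, that the key is product plus trade date; X-Ray’s actual partition keys are not described in the source, and `partition_for` and `partition` are hypothetical names.

```python
import hashlib


def partition_for(product: str, trade_date: str, n_partitions: int) -> int:
    """Map a business key (product + trade date) to a partition index."""
    key = f"{product}|{trade_date}".encode("utf-8")
    # A stable digest is used because Python's built-in hash() of str is salted per process.
    digest = hashlib.sha1(key).digest()
    return int.from_bytes(digest[:8], "big") % n_partitions


def partition(rows: list[dict], n_partitions: int) -> list[list[dict]]:
    """Group rows so every row for one product-day ends up in the same partition."""
    parts: list[list[dict]] = [[] for _ in range(n_partitions)]
    for row in rows:
        parts[partition_for(row["product"], row["date"], n_partitions)].append(row)
    return parts
```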

By combining parallel computing and data partitioning, X-Ray enables Z Corporation to process over 20 million rows of data per day consistently and efficiently, supporting rapid business expansion.
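For scale, the stated daily volume can be converted into average rates. The first figure assumes, for illustration only, that load is spread evenly over 24 hours (real trading traffic is bursty, so peaks run higher); the second assumes the full day’s data is processed within the under-one-hour window reported in Section III:

$$
\frac{20{,}000{,}000\ \text{rows/day}}{86{,}400\ \text{s/day}} \approx 231.5\ \text{rows/s},
\qquad
\frac{20{,}000{,}000\ \text{rows}}{3{,}600\ \text{s}} \approx 5{,}556\ \text{rows/s}
$$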

After fully implementing this architecture and these mechanisms, Z Corporation achieved a significant leap in both data processing capability and business support. The following section examines X-Ray’s implementation outcomes and the value it delivered, issue by issue.

III. Implementation Results: A Leap Forward in Z Corporation’s Data Governance

Following the launch of X-Ray, Z Corporation saw marked improvements in data processing efficiency, system stability, and data utilization, and addressed each core issue in turn. The key problems, solutions, and outcomes are summarized below:

1. Significant Improvement in Data Processing Efficiency

Problem: Processing pressure from 20 million rows of data per day, with low efficiency and long processing times.

Solution: A hybrid distributed architecture (MySQL + NoSQL) was used to separately handle different types of data, supported by parallel computing and MapReduce task segmentation, along with automatic load balancing and high-performance storage to optimize overall processing efficiency.

Outcome: Data processing speed increased by 60%, and total task duration was reduced to one-third. Daily processing time dropped from several hours to under one hour.

2. Effective Reduction of Storage Management Costs

Problem: Massive data volumes led to high storage costs and resource waste.

Solution: Binary compression algorithms and hot-cold data tiered management were used to reduce physical space usage, combined with an elastic resource scheduling mechanism to improve disk utilization.
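Hot-cold tiering typically keys off how recently data was accessed. The thresholds and tier names below are assumptions for illustration, since the source does not state X-Ray’s rules; the hypothetical `plan_moves` function returns only the partitions that should change tier, which is what an automated archiving job would act on.

```python
from datetime import datetime, timedelta

# Hypothetical thresholds; the article does not state X-Ray's actual tiering rules.
HOT_WINDOW = timedelta(days=7)    # frequently read: keep on SSD/NVMe
WARM_WINDOW = timedelta(days=90)  # occasionally read: compressed, cheaper disks


def storage_tier(last_access: datetime, now: datetime) -> str:
    """Classify a data partition by how recently it was read."""
    age = now - last_access
    if age <= HOT_WINDOW:
        return "hot"
    if age <= WARM_WINDOW:
        return "warm"
    return "cold"  # archive tier, e.g. object storage


def plan_moves(partitions: dict[str, datetime], current: dict[str, str],
               now: datetime) -> dict[str, str]:
    """Return {partition: new_tier} only for partitions whose tier should change."""
    moves: dict[str, str] = {}
    for name, last in partitions.items():
        tier = storage_tier(last, now)
        if tier != current.get(name):
            moves[name] = tier
    return moves
```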

Outcome: Overall IT resource costs were reduced by about 30%, with a significant reduction in reliance on high-spec servers and external storage.

3. Significant Enhancement in System Scalability and Stability

Problem: The system struggled to adapt to business growth, with frequent crashes and lags during peak periods.

Solution: A distributed architecture was adopted to support horizontal scaling and hot failover, with automatic load balancing ensuring system stability under high concurrency.
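Hot failover is commonly built on heartbeats: when the primary misses several in a row, a healthy standby is promoted. The sketch below is a hypothetical, single-process model of that logic; the node names and the three-missed-heartbeats threshold are assumptions, and a production system would also need consensus or fencing so that two nodes never act as primary at once.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    missed_heartbeats: int = 0

    def healthy(self, max_missed: int) -> bool:
        return self.missed_heartbeats < max_missed


@dataclass
class FailoverGroup:
    primary: Node
    standbys: list[Node] = field(default_factory=list)
    max_missed: int = 3  # assumed threshold

    def heartbeat(self, name: str, ok: bool) -> None:
        """Record one heartbeat result; a success resets the miss counter."""
        for node in [self.primary, *self.standbys]:
            if node.name == name:
                node.missed_heartbeats = 0 if ok else node.missed_heartbeats + 1

    def check(self) -> str:
        """Promote the first healthy standby if the primary has failed; return the active primary."""
        if not self.primary.healthy(self.max_missed):
            for i, node in enumerate(self.standbys):
                if node.healthy(self.max_missed):
                    failed = self.primary
                    self.primary = self.standbys.pop(i)
                    # The old primary rejoins as a standby; it stays ineligible
                    # for promotion until a successful heartbeat resets it.
                    self.standbys.append(failed)
                    break
        return self.primary.name


group = FailoverGroup(Node("db-a"), [Node("db-b"), Node("db-c")])
for _ in range(3):
    group.heartbeat("db-a", ok=False)
```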

Outcome: The system stably handled over 20 million rows of data daily. During peak business periods, it ran smoothly, and the failure rate dropped significantly.

4. Improved Data Quality and Accuracy

Problem: Inconsistent and uneven-quality data from multiple sources hindered analytical decision-making.

Solution: Leveraging MySQL’s strong consistency and a multi-source validation mechanism, a “closed-loop governance model” was built to control data quality at the point of entry.
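The source does not detail the “closed-loop governance model.” As an illustrative assumption, entry-point checks might deduplicate rows, reject incomplete or invalid ones, and flag trades that different sources report differently, returning the rejects and conflicts to data owners for correction. The field names, rules, and the `validate` function below are hypothetical.

```python
# Hypothetical schema: these field names are assumptions, not X-Ray's.
REQUIRED = ("trade_id", "product", "volume_bbl", "price_usd")


def validate(batches: dict[str, list[dict]]):
    """Check multi-source batches at entry.

    Returns (accepted, rejected, conflicts): accepted rows keyed by trade_id,
    rejected (source, trade_id, reason) tuples, and trade_ids on which sources disagree.
    """
    accepted: dict[str, dict] = {}
    rejected: list[tuple[str, object, str]] = []
    conflicts: list[str] = []
    for source, rows in batches.items():
        for row in rows:
            missing = [f for f in REQUIRED if row.get(f) is None]
            if missing:
                rejected.append((source, row.get("trade_id"), f"missing fields: {missing}"))
                continue
            if row["volume_bbl"] <= 0 or row["price_usd"] <= 0:
                rejected.append((source, row["trade_id"], "non-positive volume or price"))
                continue
            trade_id = row["trade_id"]
            if trade_id not in accepted:
                accepted[trade_id] = row
            elif accepted[trade_id] != row:
                # Sources disagree: keep the first version and flag the trade for review.
                conflicts.append(trade_id)
            # Exact duplicates from another source fall through and are dropped.
    return accepted, rejected, conflicts
```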

Outcome: Data accuracy improved by about 40%, and the need for repeated validation and backtracking was greatly reduced.

5. Enhanced Real-Time Processing Capability

Problem: The original system responded too slowly in trading and risk control scenarios, posing latency risks.

Solution: NoSQL’s high-concurrency read-write capabilities and a parallel task scheduling mechanism were used to strengthen real-time data processing capabilities.
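Low latency for risk control depends partly on not letting urgent work queue behind bulk jobs. This hypothetical sketch shows only the ordering side of such a scheduler: a priority queue in which risk checks run before price updates, which run before batch reports. The task kinds and priority levels are assumptions; in practice several workers would pull from a queue like this in parallel.

```python
import heapq
import itertools

# Assumed priority levels: a lower number is served first.
PRIORITY = {"risk_check": 0, "price_update": 1, "batch_report": 2}


class TaskQueue:
    def __init__(self) -> None:
        self._heap: list[tuple[int, int, str, str]] = []
        self._seq = itertools.count()  # tie-breaker: FIFO within one priority level

    def submit(self, kind: str, payload: str) -> None:
        heapq.heappush(self._heap, (PRIORITY[kind], next(self._seq), kind, payload))

    def next_task(self) -> tuple[str, str]:
        _, _, kind, payload = heapq.heappop(self._heap)
        return kind, payload

    def __len__(self) -> int:
        return len(self._heap)


queue = TaskQueue()
queue.submit("batch_report", "daily P&L")
queue.submit("price_update", "Brent curve")
queue.submit("risk_check", "limit check T-001")
queue.submit("risk_check", "limit check T-002")
```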

Outcome: Real-time processing capabilities improved by about 50%, meeting the low-latency data needs of high-frequency business scenarios.

6. Increased Data Utilization Efficiency, Unlocking Data Value

Problem: Data was scattered and cumbersome to process, making efficient decision-making difficult.

Solution: A unified architecture was used to integrate heterogeneous data, combined with automated archiving, data scheduling, and resource optimization mechanisms to reduce manual intervention and improve data access and analysis efficiency.

Outcome: Trading and risk control personnel were freed from tedious data processing and could focus more on business insights and strategy formulation. Data analysis efficiency increased by about 70%.

Through these multi-dimensional improvements, Z Corporation significantly enhanced its data processing efficiency and system stability and resolved long-standing issues such as high storage costs, poor data quality, and weak real-time capabilities. With X-Ray’s support, its data governance capabilities have reached a new level, laying a solid foundation for efficient operations and agile responsiveness.

IV. Outlook: From Data Processing to Value Extraction

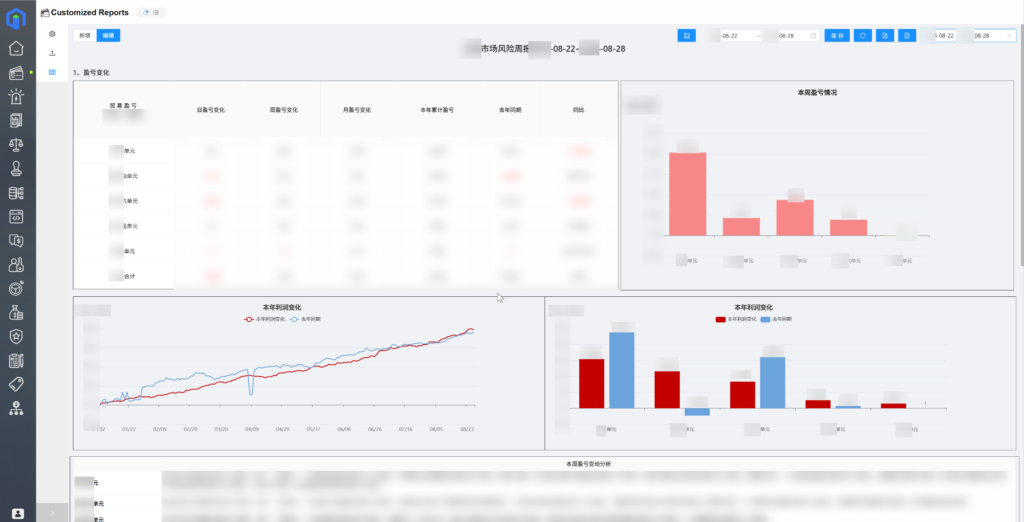

The successful deployment of X-Ray not only enabled Z Corporation to build a robust and efficient large-scale data governance architecture but also laid the groundwork for continuously realizing data value. Building on this foundation, X-Ray has gradually expanded into more business scenarios, including data visualization reports, risk control alerts, and business dashboards, helping Z Corporation move from being “data-driven” to achieving “intelligent decision-making.”

Looking ahead, as cooperation between the two parties deepens, X-Ray will further apply its strengths in massive data mining and high-value data utilization, providing a stronger digital foundation for Z Corporation’s global trade operations and reinforcing its leading position in the energy trading sector.